When broadcasting live events, using multiple cameras creates a more engaging and dynamic viewer experience. For live broadcasts from sports stadiums or concert venues, a television producer needs to switch seamlessly between video feeds depending on which angle is most suitable at a given moment. Typically, a single audio stream is used throughout, because sudden changes in audio are very noticeable and can be distracting. If the video feeds are not synchronized with each other and with the main audio stream, switching between cameras can cause problems that degrade the viewing experience, such as noticeable lag when cutting between feeds or lip sync errors.

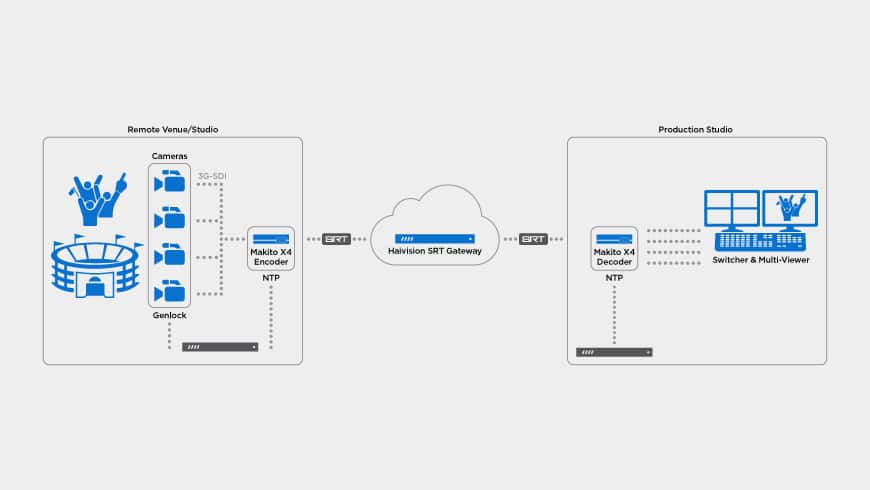

When producing live content from remote video, the decoders receiving the live feeds must be kept in sync so that a producer can immediately use any of the sources in the live broadcast workflow. There are several ways to mitigate multi-camera and audio sync issues over long distances, including multiplexing camera feeds over a satellite uplink or using a dedicated private network. Streaming over the internet is more cost-effective and flexible; however, it can be unpredictable, because round-trip times and available bandwidth fluctuate continually.

To address these issues, technologies such as Stream Sync from Haivision apply a short buffer at the receiving end so that decoders can realign the video streams using the embedded timecode generated by the synchronized encoders on location. Syncing remote video streams over the public internet in this way avoids the cost and rigidity of satellite links or privately managed networks, and it enables any type of broadcaster to live stream events with multiple camera angles from any location with broadband internet access.
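The idea of a receive-side buffer that realigns streams by timecode can be illustrated with a small sketch. This is not Haivision's implementation; it is a hypothetical model assuming that every encoder stamps each frame with a capture timecode (in milliseconds) from a shared, synchronized clock, and that the receiver uses a single fixed playout delay. The names `Frame`, `SyncBuffer`, `playout_delay_ms`, and `release` are illustrative only. Each frame is held until `timecode + playout_delay_ms`, so frames captured at the same instant are released together regardless of how long each one spent crossing the network.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Frame:
    # Capture time stamped by the (assumed synchronized) encoder; frames are ordered by this alone.
    timecode_ms: int
    stream_id: str = field(compare=False)
    # Time the frame reached the receiver, on the same shared clock.
    arrival_ms: int = field(compare=False)


class SyncBuffer:
    """Hypothetical receiver-side aligner with a fixed playout delay.

    Every frame is scheduled for playout at timecode_ms + playout_delay_ms,
    so frames from different cameras with the same timecode come out together.
    """

    def __init__(self, playout_delay_ms: int):
        self.delay = playout_delay_ms
        self.pending: list[Frame] = []  # min-heap ordered by timecode
        self.late: list[Frame] = []     # frames that missed their playout slot

    def push(self, frame: Frame) -> None:
        # A frame that arrives after its scheduled playout time cannot be aligned.
        if frame.arrival_ms > frame.timecode_ms + self.delay:
            self.late.append(frame)
        else:
            heapq.heappush(self.pending, frame)

    def release(self, now_ms: int) -> list[Frame]:
        # Emit every buffered frame whose playout time has been reached.
        out = []
        while self.pending and self.pending[0].timecode_ms + self.delay <= now_ms:
            out.append(heapq.heappop(self.pending))
        return out


if __name__ == "__main__":
    buf = SyncBuffer(playout_delay_ms=250)
    # Two cameras capture a frame at t=0 but arrive 40 ms and 180 ms later.
    buf.push(Frame(0, "camA", 40))
    buf.push(Frame(0, "camB", 180))
    print([f.stream_id for f in buf.release(250)])
```

In this model the playout delay must exceed the largest expected network latency plus its jitter; a larger delay tolerates worse connections at the cost of more end-to-end latency, while a frame that arrives too late is set aside rather than played out of sync.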